
AI and ML Inference

Maximize accuracy and speed for seamless integration into your systems

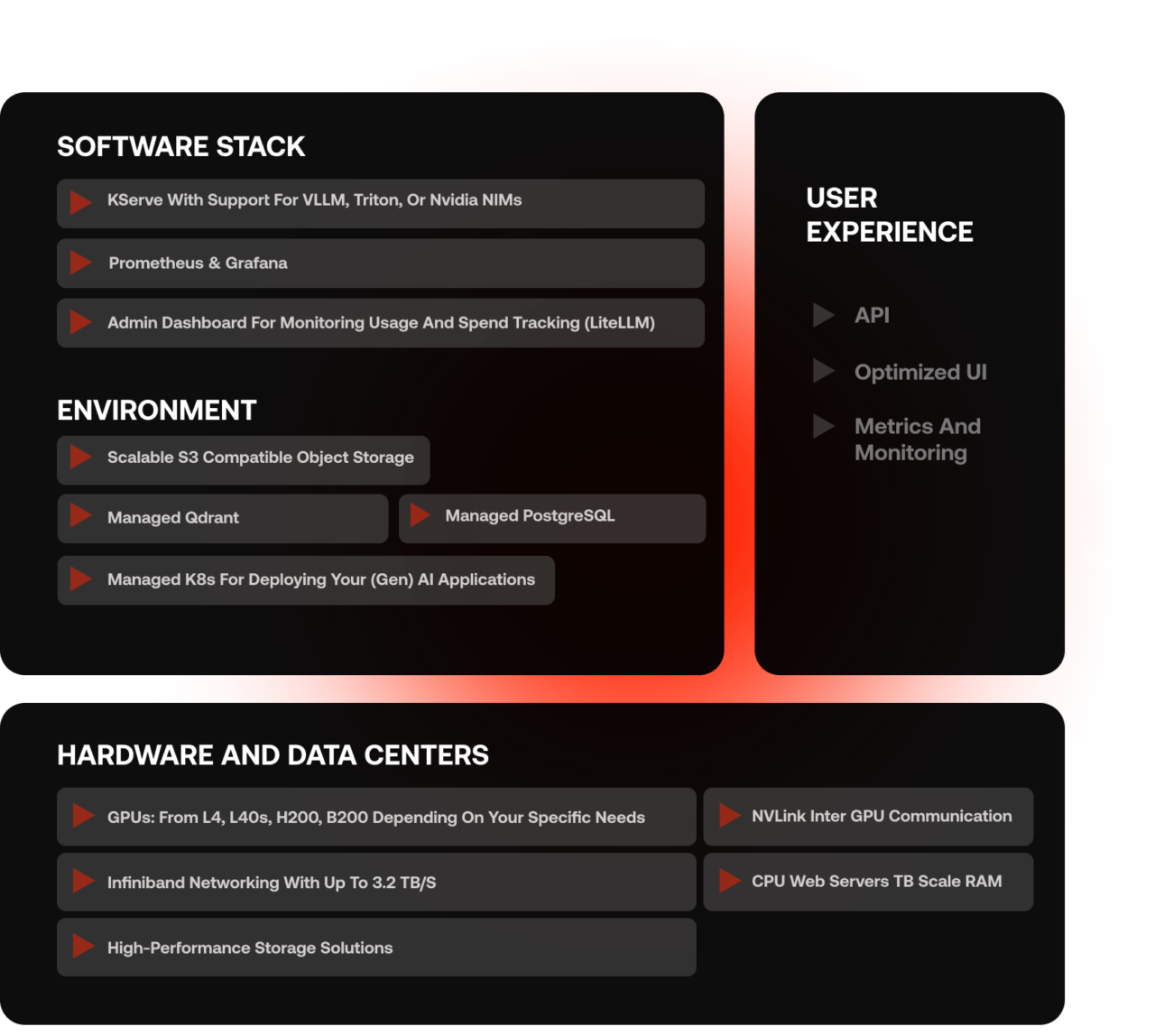

Built on Industry-Proven Technologies

Leverage a battle-tested, open-source AI stack built on Kubernetes, Kubeflow, KServe, Triton, and vLLM. Whether you are deploying transformer models for inference or managing complex ML pipelines, our platform delivers scalability, flexibility, and performance without vendor lock-in.

Secure and Compliant AI Inference

Keep your AI operations private with isolated environments, strict access controls, and compliance-ready infrastructure. Your models and data remain fully sovereign, supporting regulatory compliance and enterprise-grade security.

Real-Time Monitoring and Observability

Gain full visibility into model performance with real-time inference monitoring, logging, and alerting. Integrate with Prometheus, Grafana, and OpenTelemetry to track response times, accuracy drift, and resource utilization.

Seamless Deployment for LLM-Powered Applications

Integrate and deploy your LLM-powered applications with LangChain, LangSmith, Langfuse, Orq.ai, and other frameworks. Whether you're building AI agents, RAG pipelines, or enterprise chatbots, our infrastructure is optimized for both development and production deployment.

Private AI Full-Stack Support

Tailor AI/ML models to your specific needs with full-stack support: partner integrations, a robust ecosystem, scalable infrastructure, and high-performance hardware for optimal performance and flexibility.

100% Compliant, 100% European