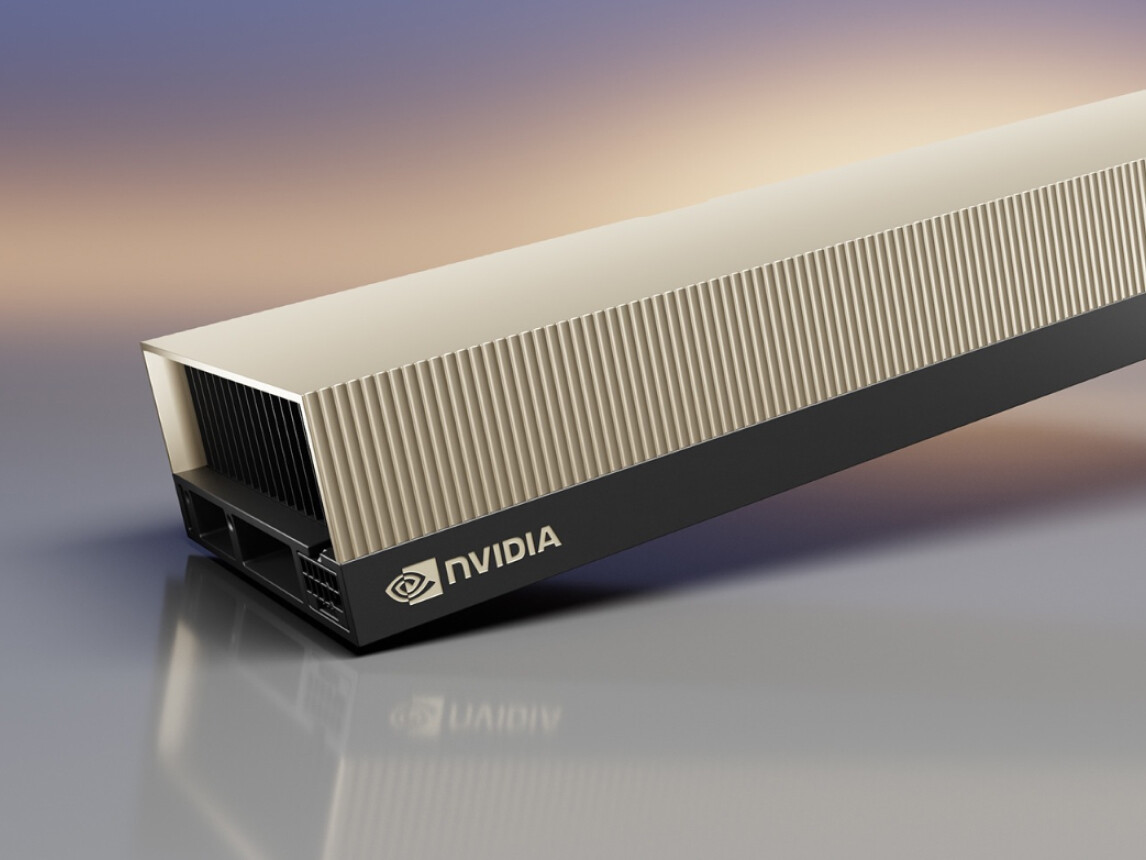

Dedicated GPU Infrastructure for production AI

Enterprise AI demands reliability, performance, accountability, and GDPR compliance.

The problem isn’t access to GPUs. It’s running AI workloads in production on infrastructure never built for it.

European and sovereign

GPU infrastructure deployed and operated entirely in Europe, ensuring data locality, jurisdictional control, and long-term availability for regulated and strategic workloads.

Private by design

Deploy GPU infrastructure in isolated environments for your private AI workloads, with full control over data locality, access paths, and system configuration at any scale.

Sustained performance, not best-effort

GPU instances and clusters are built according to NVIDIA Reference Architectures, delivering predictable throughput under continuous load, not just benchmark peaks.

Whitepaper

The Economics of GPU Clusters

In this whitepaper, we examine the key factors that determine the cost of AI model training and explain how infrastructure quality makes inference more efficient and maximizes return on investment.